Exploring Google Gemini: A Detailed Overview of the New Generative AI Platform

The landscape of artificial intelligence is undergoing a transformative shift. No longer content with crunching numbers or mimicking human speech, the next generation of AI aims to spark creativity, ignite innovation, and fundamentally reshape the way we interact with the world. Enter Google Gemini, a game-changer in the field of generative AI, poised to revolutionize a vast array of sectors from art and entertainment to scientific research and technological advancement.

What is Google Gemini?

Gemini is Google’s family of generative AI models, developed jointly by Google’s AI research labs, DeepMind and Google Research. It comes in three distinct models, each aimed at different needs and use cases:

- Gemini Ultra: The Flagship Model
  - Positioned as the flagship of the Gemini family, Ultra promises an unparalleled generative AI experience with advanced capabilities.
- Gemini Pro: The “Lite” Model
  - Offering a streamlined yet powerful generative AI experience, Pro is the lite variant designed for versatility and efficiency.
- Gemini Nano: Mobile Efficiency
  - Breaking new ground in mobile AI, Nano is a compact and distilled model optimized for seamless operation on mobile devices, exemplified by its compatibility with the Pixel 8 Pro.

Natively Multimodal Excellence

Gemini’s standout feature is that it is a “natively multimodal” AI model family. This sets it apart from predecessors like Google’s LaMDA, which processes text exclusively. Gemini’s capabilities extend to audio, images, videos, and diverse codebases, so it can interpret inputs that combine several of these modalities.

Training Beyond Boundaries

Every Gemini model undergoes an extensive training regimen, involving both pre-training and fine-tuning on a diverse dataset. This dataset encompasses audio, images, videos, extensive codebases, and multilingual text. Through this nuanced training approach, Gemini gains the ability to operate seamlessly across various modalities, showcasing unparalleled versatility beyond models confined to textual data.

The Distinctiveness of Gemini Models

While Gemini’s proficiency in handling images, audio, and other modalities currently faces some limitations, it signifies a considerable improvement compared to models restricted to textual domains. The capability of Gemini models to comprehend and generate content across different modalities positions them as versatile tools with vast potential for innovation.

In contrast to models like LaMDA, constrained to the realm of text-based outputs, Gemini emerges as a multifaceted generative AI family. This heralds a new era of possibilities extending beyond the boundaries of conventional language models, promising a future where AI seamlessly integrates with diverse forms of human expression and creativity.

Bard vs. Gemini: Unraveling the Distinctions

Google’s branding does not make the relationship between Bard and Gemini obvious. Bard is an interface for accessing certain Gemini models; think of it as an app or client for Gemini and other GenAI models. Gemini, by contrast, is a family of models, not an app or front end. There is no standalone Gemini experience, and one is unlikely to appear.

To draw a parallel with OpenAI’s products, Bard corresponds to ChatGPT, the conversational AI app, while Gemini corresponds to the underlying language model that powers it, which in ChatGPT’s case is GPT-3.5 or GPT-4.

Adding to the complexity, Gemini operates independently from Imagen-2, a text-to-image model. Understanding the relationship between these entities and their alignment with Google’s overarching AI strategy may not be immediately evident. If you find yourself seeking clarity, rest assured that you’re not alone in navigating this intricacy.

Exploring Gemini’s Capabilities: A Look into Its Functionality

Given the multimodal nature of Gemini models, they possess the theoretical capacity to undertake various tasks, ranging from transcribing speech to captioning images and videos, to generating artwork. While only a few of these capabilities have materialized into products, Google holds the promise of unveiling more functionalities in the not-too-distant future.

However, skepticism arises considering Google’s track record. The original Bard launch fell short of expectations, and a recent video showcasing Gemini’s capabilities was found to be heavily doctored. Despite Gemini’s availability in a limited form today, there remains uncertainty about the fulfillment of Google’s claims.

Assuming Google is being truthful in its claims, here is what the different tiers of Gemini models are expected to do upon their release:

Gemini Ultra

Gemini Ultra, the foundational model on which others are built, is currently accessible to a select set of customers within specific Google apps and services. Google claims that Gemini Ultra can assist with tasks like physics homework, providing step-by-step solutions and identifying potential errors in filled-in answers. It’s also touted for tasks such as recognizing relevant scientific papers, extracting information, and updating charts with generated formulas based on recent data.

While Gemini Ultra technically supports image generation, this feature won’t be available at the model’s launch. Google suggests this is due to the complexity of the mechanism, which outputs images ‘natively’ without an intermediary step.

Gemini Pro

Unlike Gemini Ultra, Gemini Pro is publicly available today, with its capabilities varying depending on the platform. In Bard, where Gemini Pro first launched in a text-only form, it supposedly surpasses LaMDA in reasoning, planning, and understanding capabilities. Independent studies have indicated Gemini Pro’s superiority over OpenAI’s GPT-3.5 in handling longer and more complex reasoning chains.

However, challenges persist, with the model struggling in certain areas, particularly with math problems involving multiple digits. Users have reported instances of bad reasoning and mistakes, especially in factual accuracy. Google has pledged improvements, but the timeline remains unclear.

Gemini Pro is also accessible via API in Vertex AI, Google’s fully managed AI developer platform. This allows text input and text output, with an additional endpoint, Gemini Pro Vision, capable of processing both text and imagery, including photos and videos. Developers within Vertex AI can customize Gemini Pro for specific contexts through fine-tuning or ‘grounding,’ and integration with external third-party APIs is also possible for specific actions.

In ‘early 2024,’ Vertex customers will gain the capability to leverage Gemini Pro for developing custom conversational voice and chat agents, commonly known as chatbots. Gemini Pro will also be integrated as an option for driving search summarization, recommendation, and answer generation features within Vertex AI. This integration will involve drawing on documents across modalities (e.g., PDFs, images) from diverse sources (e.g., OneDrive, Salesforce) to fulfill user queries effectively.

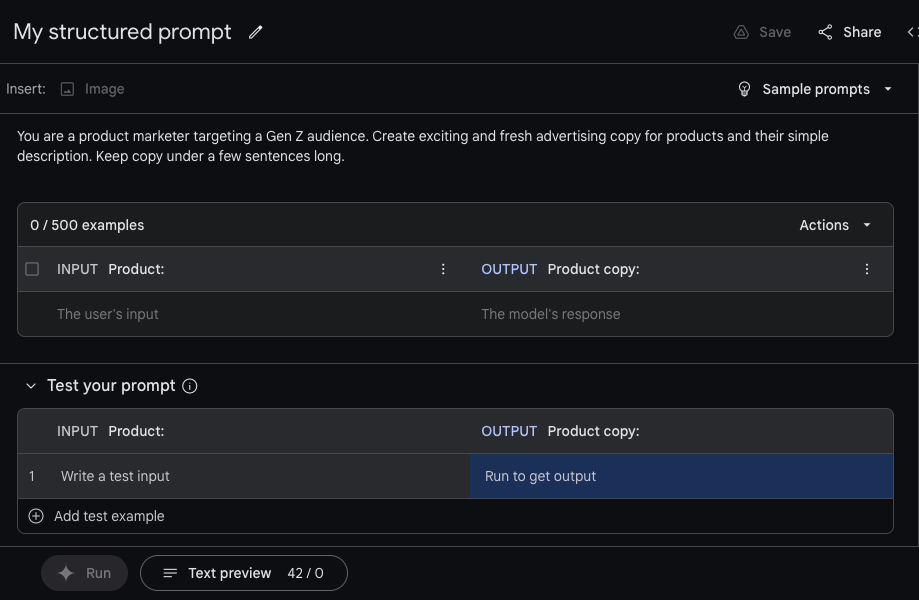

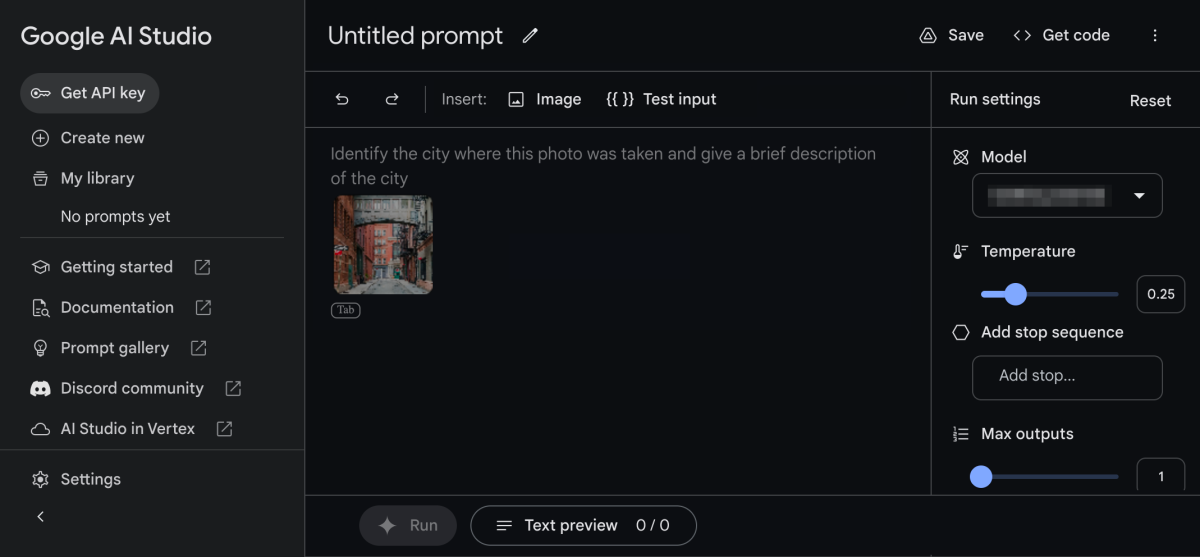

In AI Studio, a web-based tool for app and platform developers, there are workflows for creating freeform, structured, and chat prompts using Gemini Pro. Developers have access to both the Gemini Pro and Gemini Pro Vision endpoints, and they can adjust the model temperature to control the output’s creative range.
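To make the temperature setting more concrete, here is a minimal Python sketch of temperature-scaled softmax, the general technique that a "temperature" control usually refers to in language models. It is a generic illustration, not Gemini's or AI Studio's actual implementation; the function name `softmax_with_temperature` and the example scores are invented for this sketch.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores into probabilities, scaled by a temperature.

    Lower temperatures sharpen the distribution (more predictable output);
    higher temperatures flatten it (more varied output).
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate next words.
example_logits = [2.0, 1.0, 0.5]

low = softmax_with_temperature(example_logits, 0.2)
high = softmax_with_temperature(example_logits, 2.0)

print("temperature 0.2:", [round(p, 3) for p in low])
print("temperature 2.0:", [round(p, 3) for p in high])
```

At the lower temperature almost all of the probability lands on the top-scoring candidate, while at the higher temperature the three candidates are much closer together, which is why a higher setting tends to produce more varied text.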

Gemini Nano: Unlocking Efficiency for On-Device AI

Gemini Nano emerges as a compact counterpart to the Gemini Pro and Ultra models, offering the efficiency to run directly on select smartphones rather than relying on external servers. Currently, it is integrated into two features on the Pixel 8 Pro: Summarize in Recorder and Smart Reply in Gboard.

In the Recorder app, users can seamlessly record and transcribe audio with the added benefit of a Gemini-powered summary for recorded conversations, interviews, presentations, and more. Notably, these summaries are accessible even without a signal or Wi-Fi connection, ensuring privacy as no data leaves the user’s phone during the process.

Within Gboard, Google’s keyboard app, Gemini Nano is available as a developer preview. It drives the Smart Reply feature, designed to suggest the next conversational step when engaging in messaging apps. Although initially limited to WhatsApp, Google plans to expand this feature to more apps in 2024, enhancing the versatility of Gemini Nano.

Assessing Gemini Against OpenAI’s GPT-4: Unveiling the Verdict

The true standing of the Gemini family in comparison to OpenAI’s GPT-4 remains uncertain until Google launches Ultra later this year. Google asserts advancements beyond the state of the art, typically represented by OpenAI’s GPT-4.

Google has emphasized Gemini’s superiority in various benchmarks, asserting that Gemini Ultra surpasses current state-of-the-art results on “30 of the 32 widely used academic benchmarks in large language model research and development.” Additionally, Google claims that Gemini Pro outperforms GPT-3.5 in tasks such as content summarization, brainstorming, and writing.

However, the debate about whether benchmarks genuinely reflect a superior model remains open. While Google highlights improved scores, these seem only marginally better than OpenAI’s corresponding models. Early user feedback and academic observations raise concerns, noting that Gemini Pro tends to provide inaccurate facts, struggles with translations, and offers subpar coding suggestions. The true measure of Gemini’s prowess will likely unfold with broader usage and scrutiny in the coming months.

Understanding the Pricing Structure of Gemini

Gemini Pro currently offers free usage within Bard, AI Studio, and Vertex AI. However, upon the conclusion of the preview phase in Vertex, a pricing model will be implemented.

For Gemini Pro in Vertex, the quoted cost is $0.0025 per character for input and $0.00005 per character for output. Vertex customers are billed per 1,000 characters, which is roughly 140 to 250 words. For models like Gemini Pro Vision, pricing is per image, at $0.0025 each.

To illustrate, consider a 500-word article, assumed here to be about 2,000 characters. Summarizing it with Gemini Pro would cost 2,000 × $0.0025 = $5. Generating an article of similar length would cost 2,000 × $0.00005 = $0.10. Keep these rates in mind when using Gemini Pro in Vertex so you can manage costs.
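The same arithmetic can be written as a small Python sketch. The two rate constants are simply the per-character figures quoted in this article and should be treated as placeholders to check against current Vertex AI pricing; the function name `estimate_cost` is hypothetical. Like the example above, it charges only input characters for summarization and only output characters for generation.

```python
from decimal import Decimal

# Per-character rates as quoted in this article (placeholders, not verified).
INPUT_RATE_PER_CHAR = Decimal("0.0025")
OUTPUT_RATE_PER_CHAR = Decimal("0.00005")


def estimate_cost(input_chars=0, output_chars=0):
    """Return the estimated cost in US dollars for a single request."""
    return input_chars * INPUT_RATE_PER_CHAR + output_chars * OUTPUT_RATE_PER_CHAR


# The article's assumption: a 500-word piece is about 2,000 characters.
article_chars = 2000

summarize_cost = estimate_cost(input_chars=article_chars)
generate_cost = estimate_cost(output_chars=article_chars)

print(f"Summarizing: ${summarize_cost:.2f}")
print(f"Generating:  ${generate_cost:.2f}")
```

Using `Decimal` rather than floating-point numbers keeps the small per-character rates exact, which matters once many requests are summed.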

Exploring Gemini’s Availability: Where to Experience Gemini?

Gemini Pro

The most accessible entry point to explore Gemini Pro is within Bard. Currently, a fine-tuned version of Gemini Pro is actively responding to text-based queries in English within Bard in the U.S., with plans to expand language support and availability to additional countries in the future.

For a preview experience, Gemini Pro is also accessible in Vertex AI through an API. The API is currently free to use “within limits” and supports 38 languages and regions, including Europe. Notably, it incorporates features such as chat functionality and filtering.

Developers can also find Gemini Pro in AI Studio. This service allows developers to iterate prompts and create Gemini-based chatbots, obtaining API keys for integration into their apps or exporting the code to a more comprehensive integrated development environment (IDE).

In the coming weeks, Duet AI for Developers, Google’s suite of AI-powered assistance tools for code completion and generation, will integrate a Gemini model. Additionally, Google plans to introduce Gemini models to development tools for Chrome and its Firebase mobile development platform in early 2024.

Gemini Nano

Gemini Nano is currently available on the Pixel 8 Pro and is expected to extend to other devices in the future. Developers keen on incorporating the model into their Android apps can sign up for a sneak peek.

Stay tuned for continuous updates on the latest developments in this post.